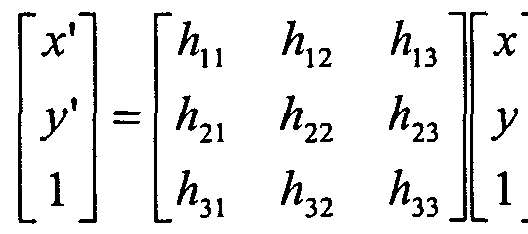

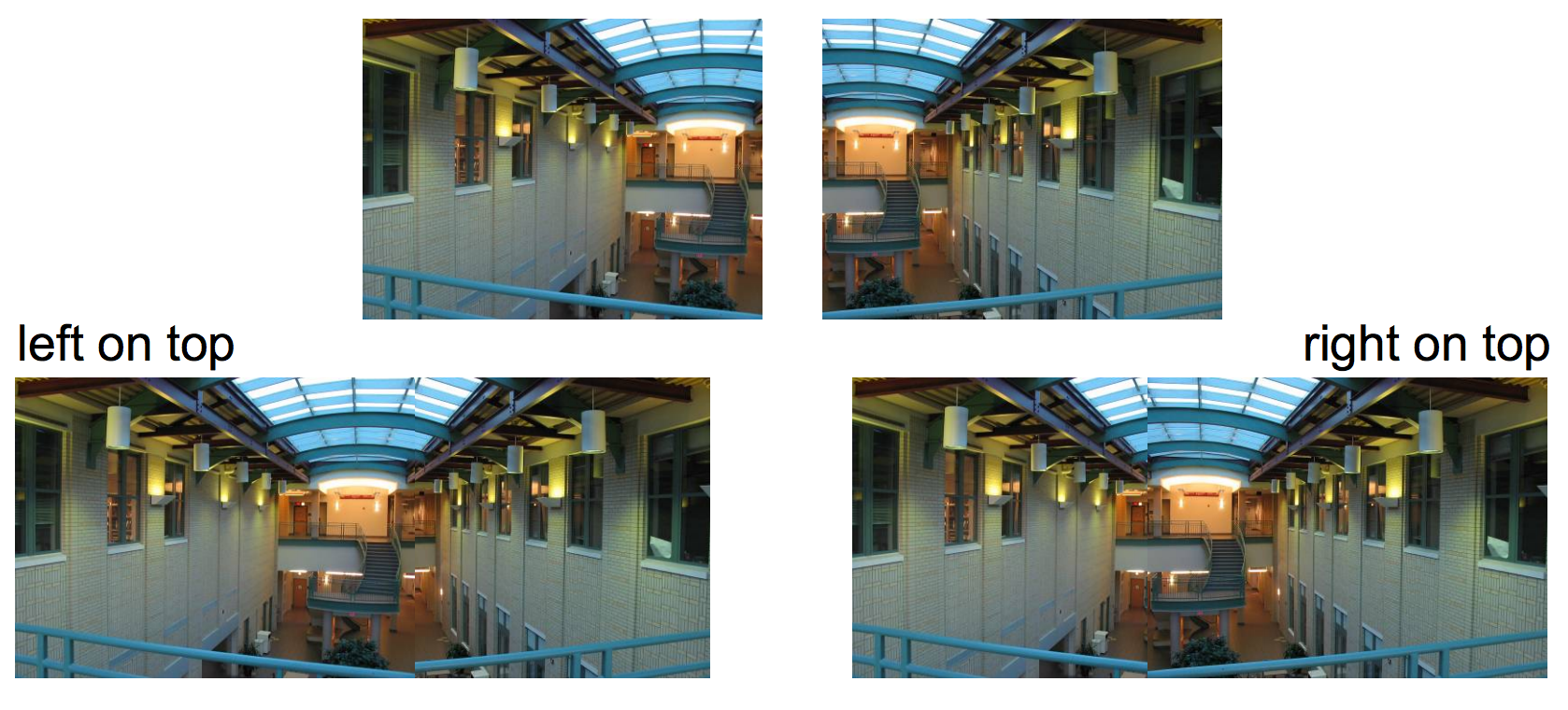

A view of the Palazzo lobby from my stay over the summer.

The floor pattern looked rather interesting, so let's take a closer look.

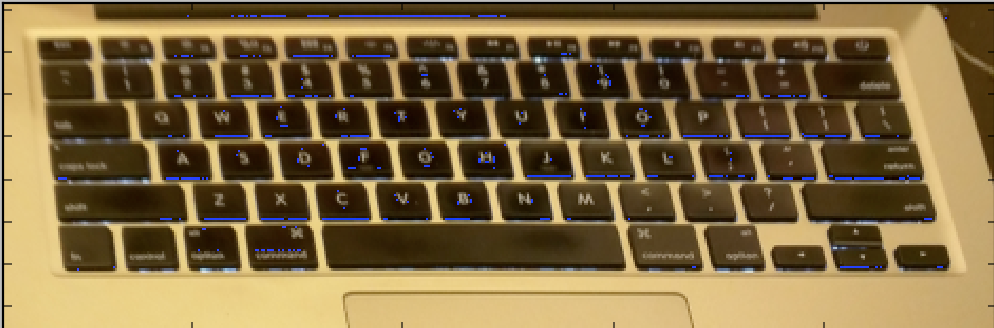

A front view of my MacBook.

Hey, I can see my keyboard from way up here!

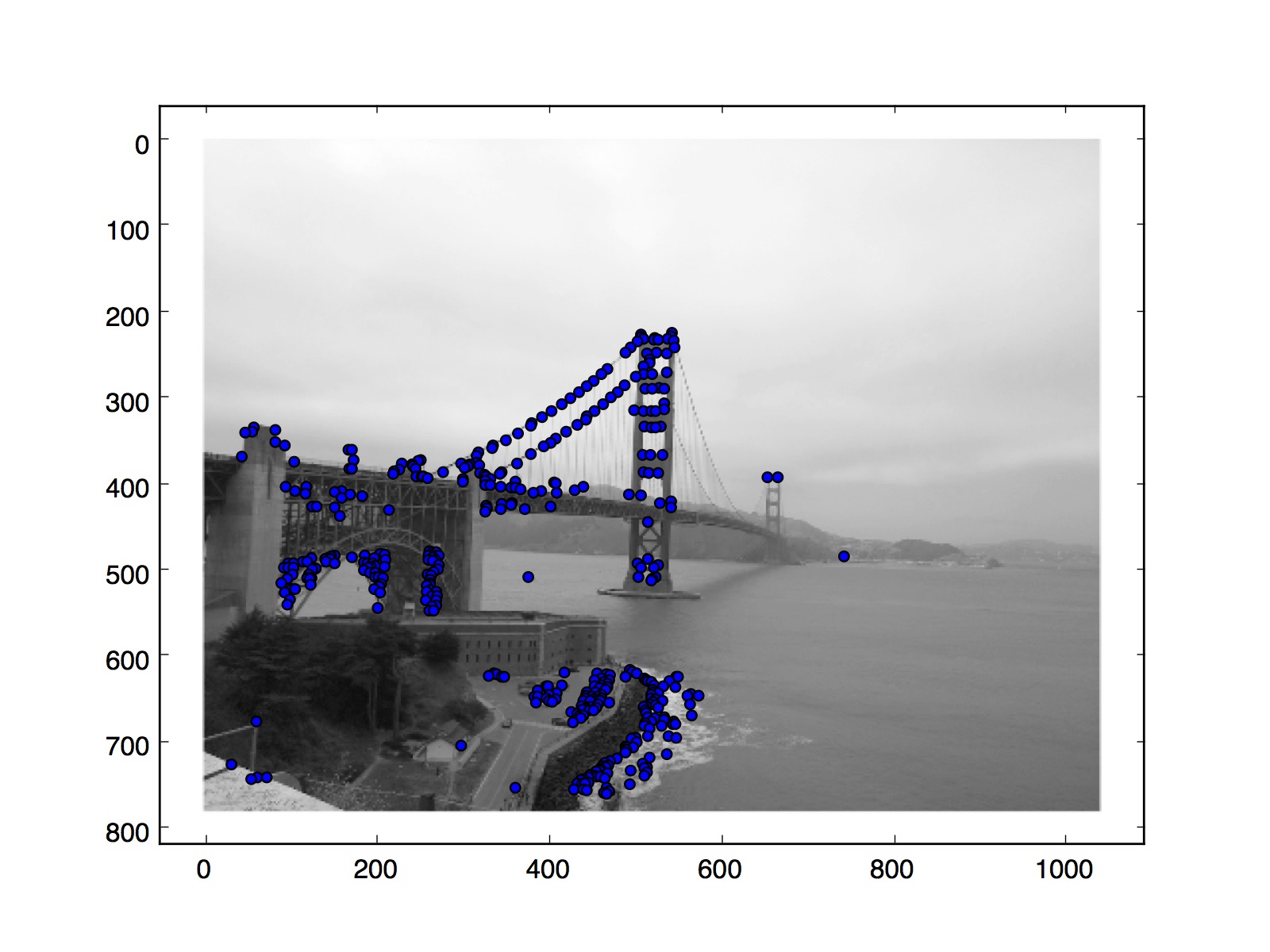

Golden Gate on the left

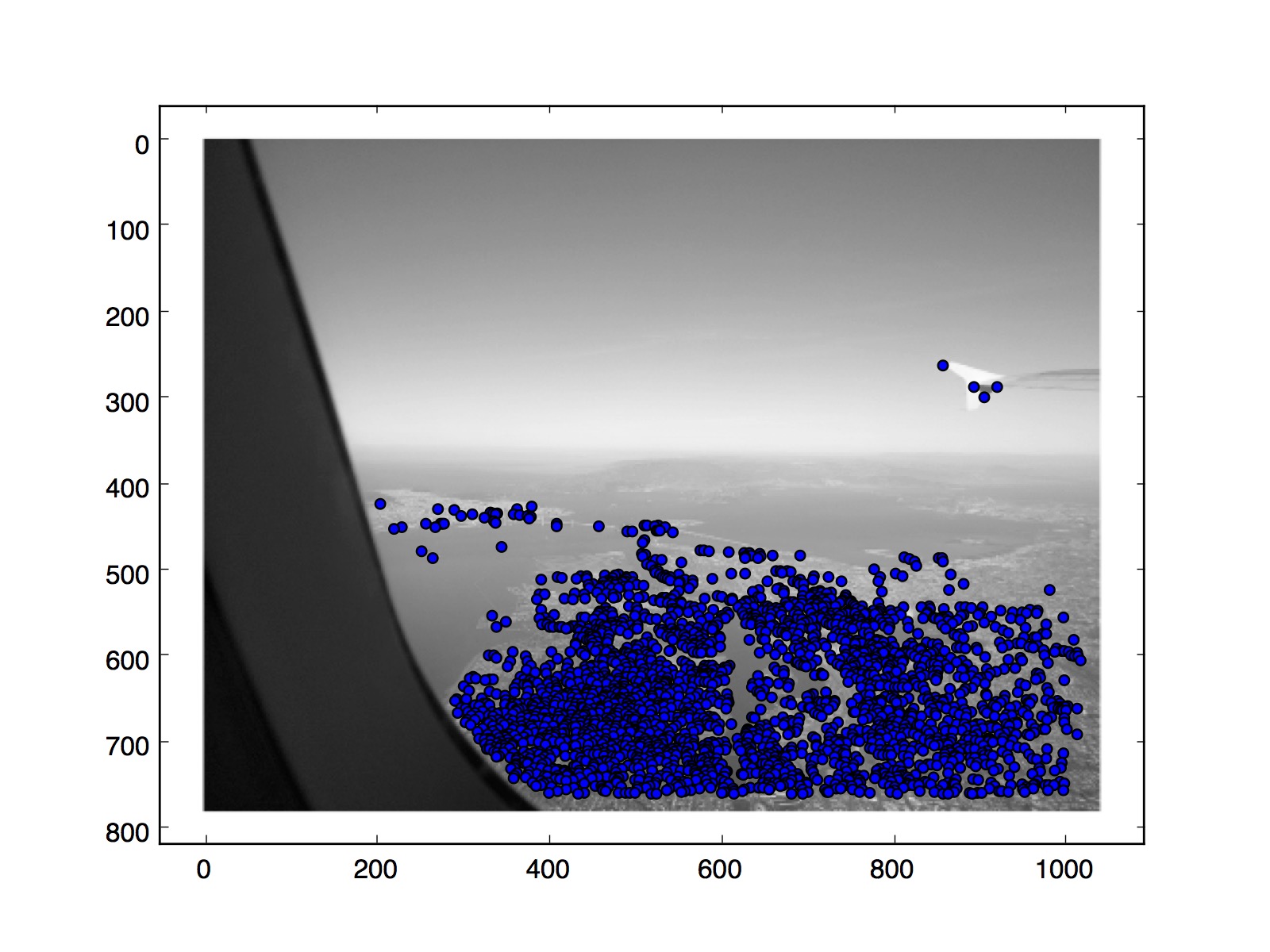

Golden Gate on the right

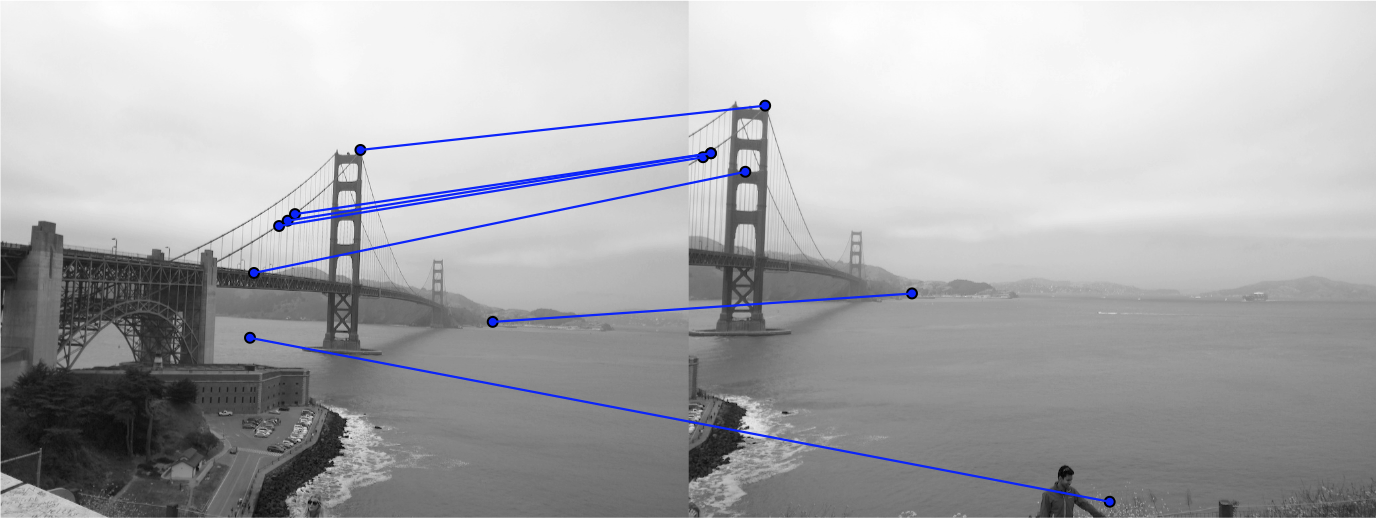

Golden Gate without blending

Golden Gate Manual

Golden Gate Google Photos

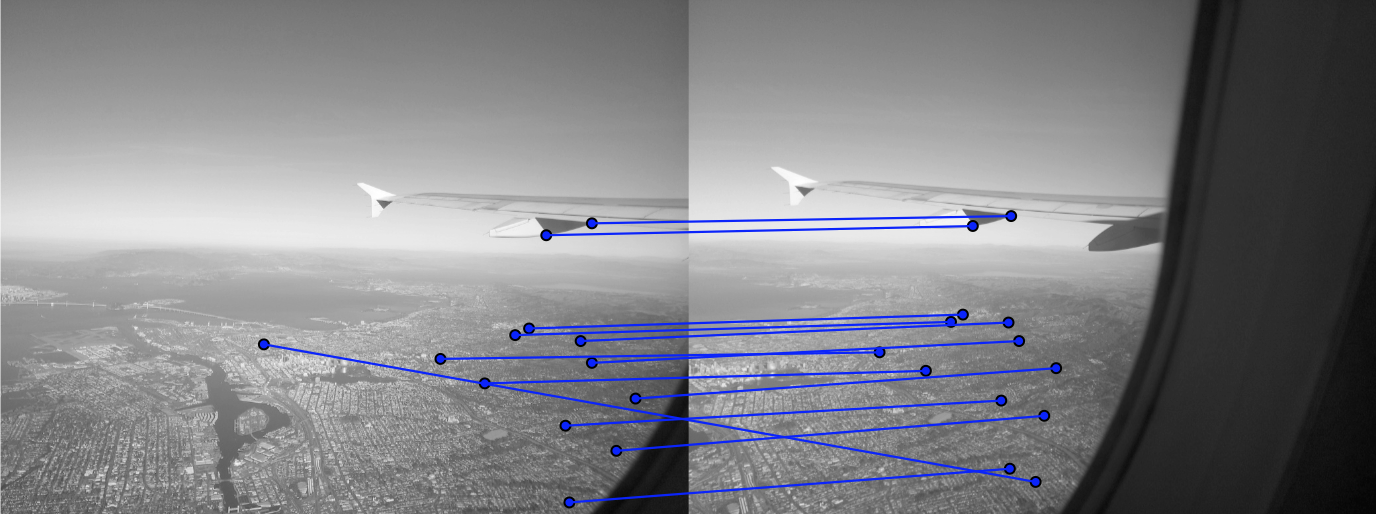

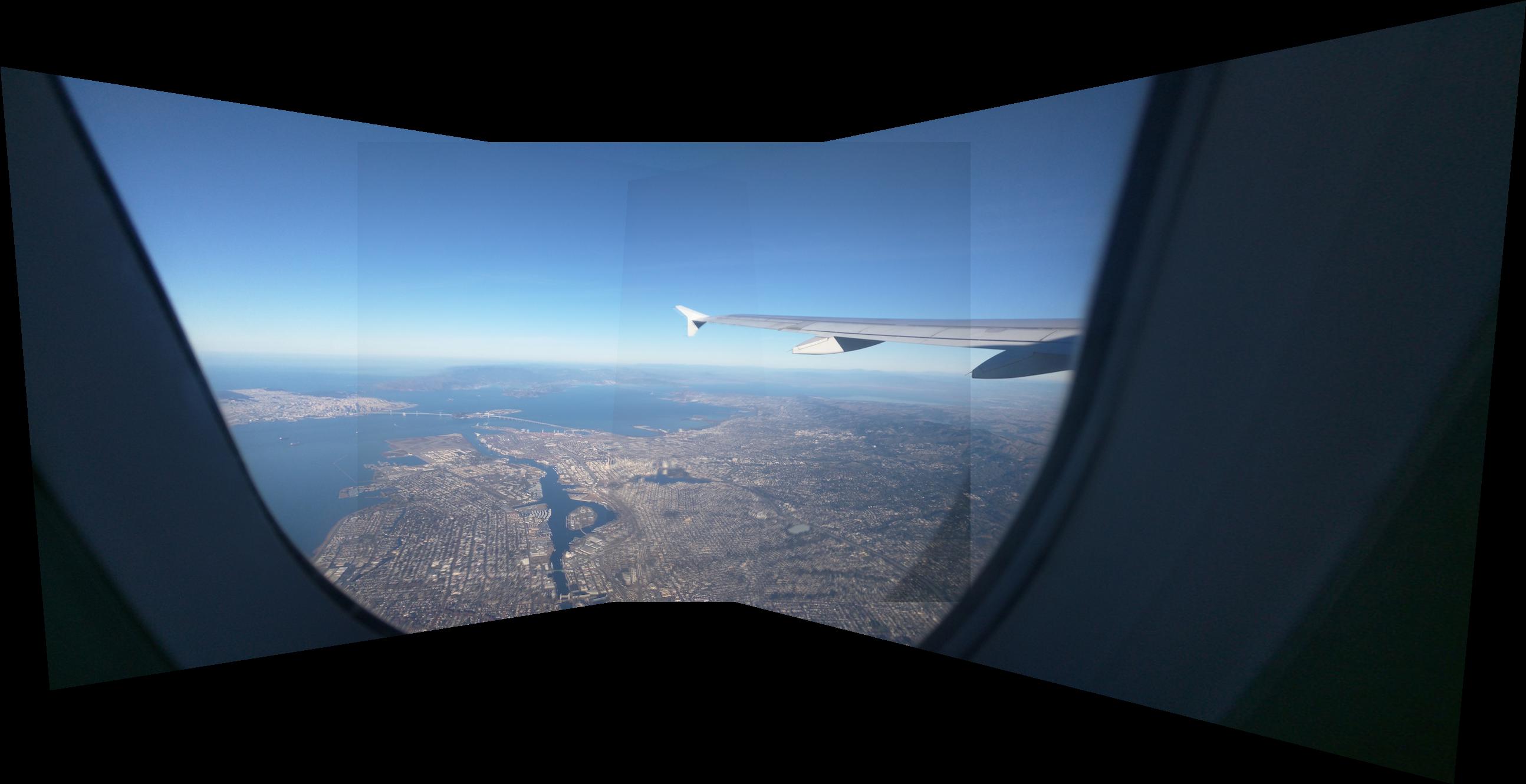

San Francisco Left

San Francisco Center

San Francisco Right

San Francisco, linear blending

San Francisco, Automatic

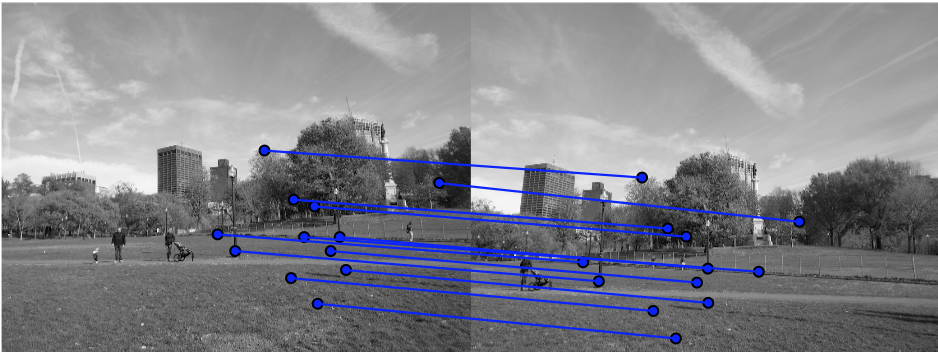

Boston Common, Left

Boston Common, Center

Boston Common, Right

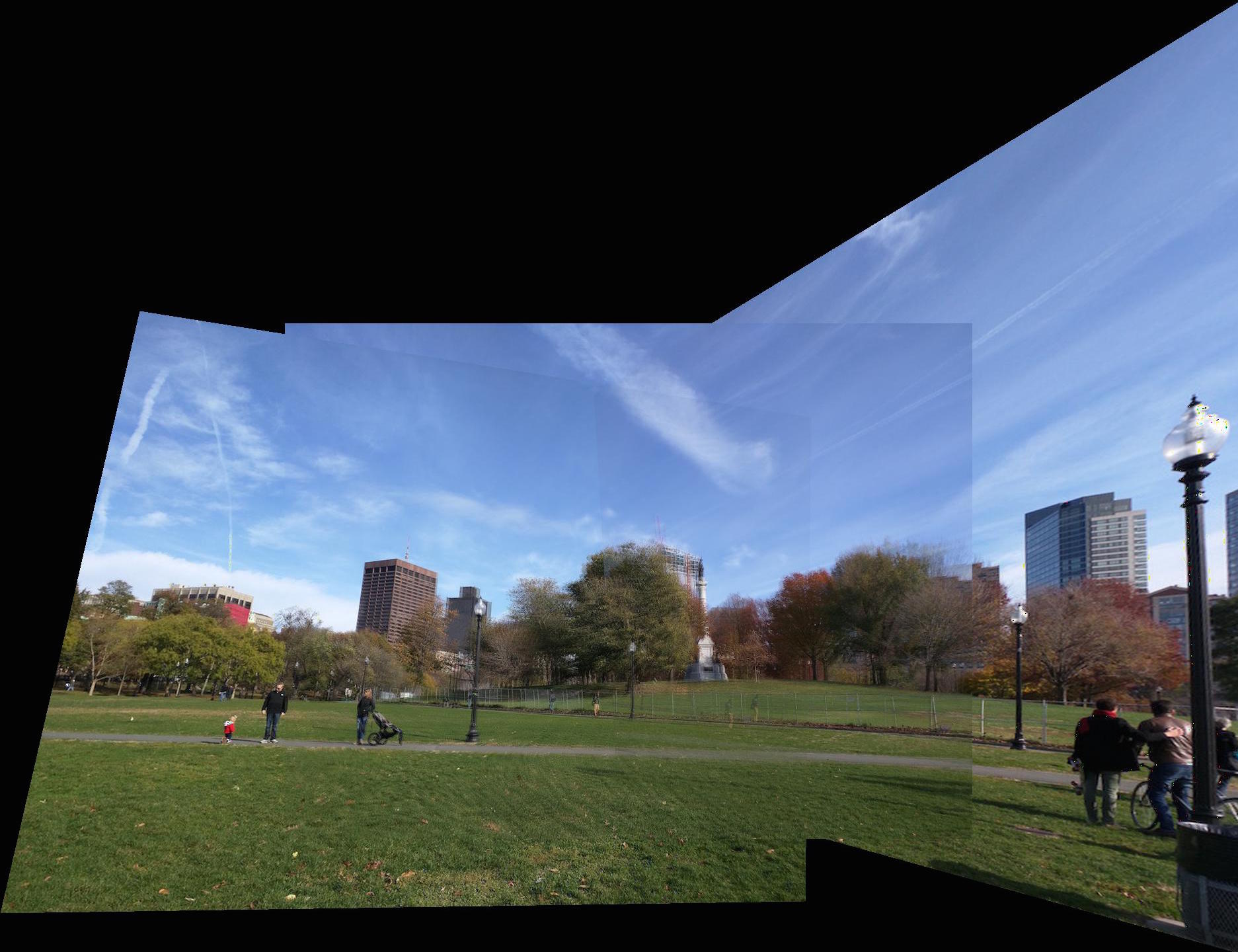

Boston Common, Manual

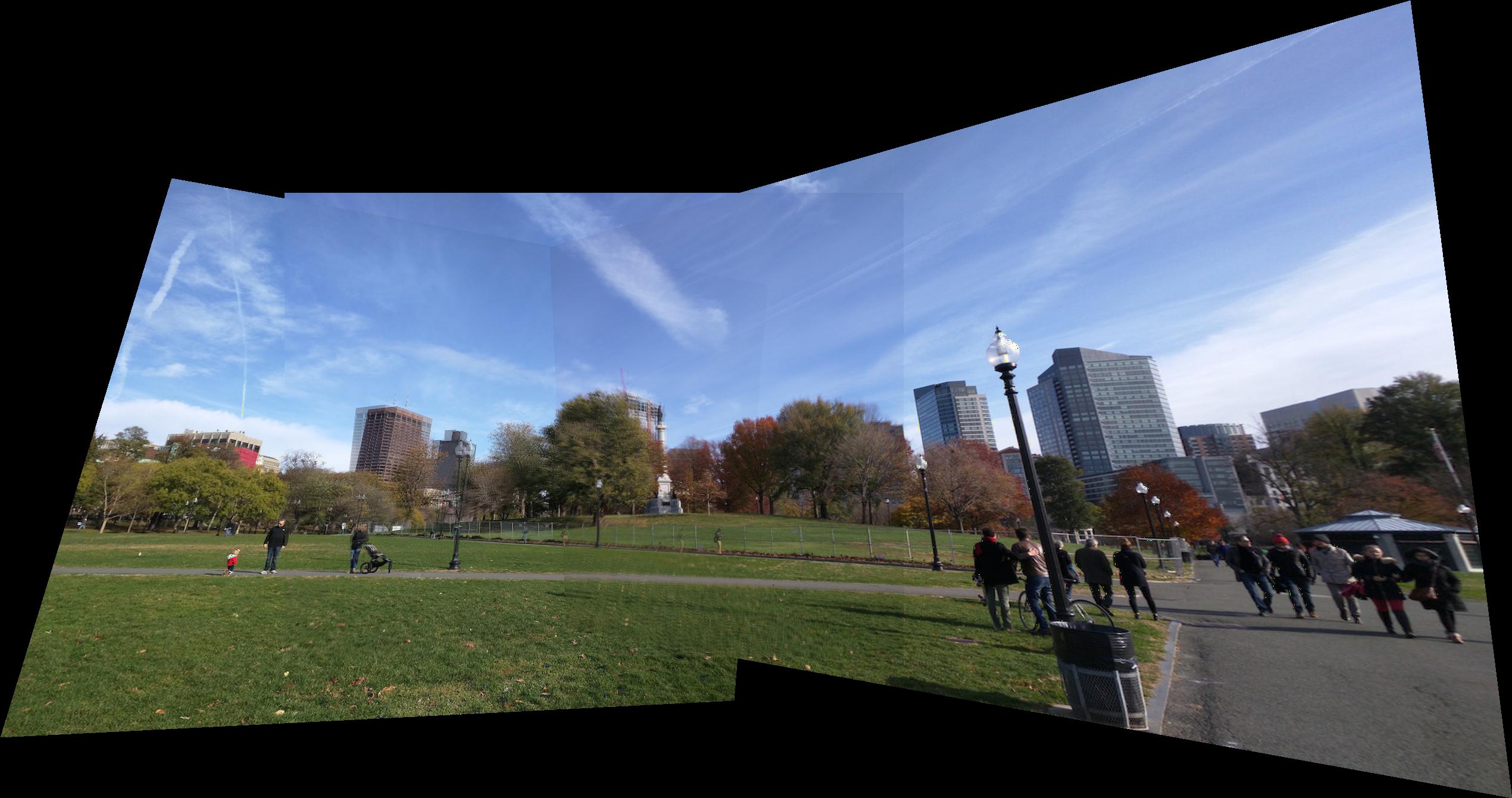

Boston Common, Automatic

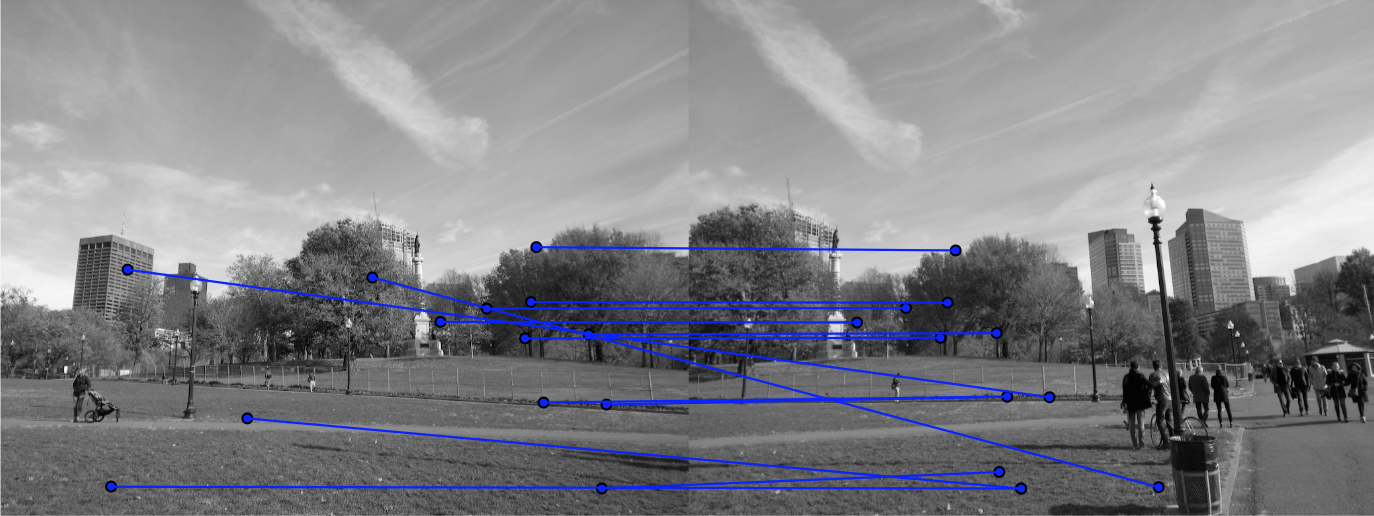

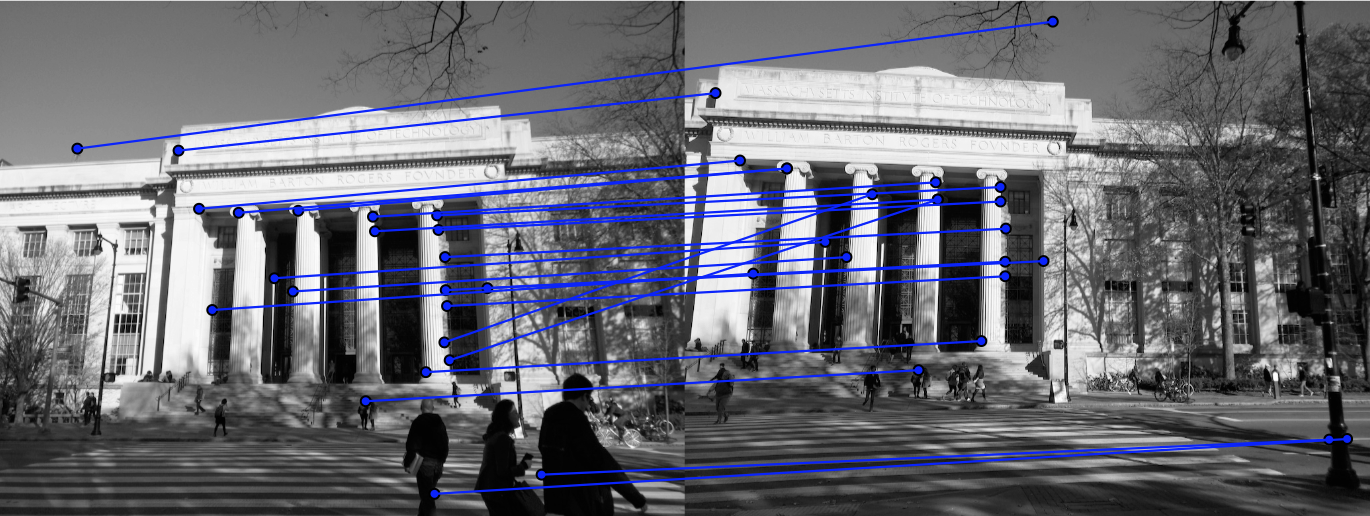

MIT, Left

MIT, Center

MIT, Right

MIT, Manual

MIT, Automatic
