((s_i - s_j) - (x_i - x_j)).((x_i - t_j) - (s_i - s_j)).

np.roll) into our source matrix and copy the target gradients into our destination vector b.

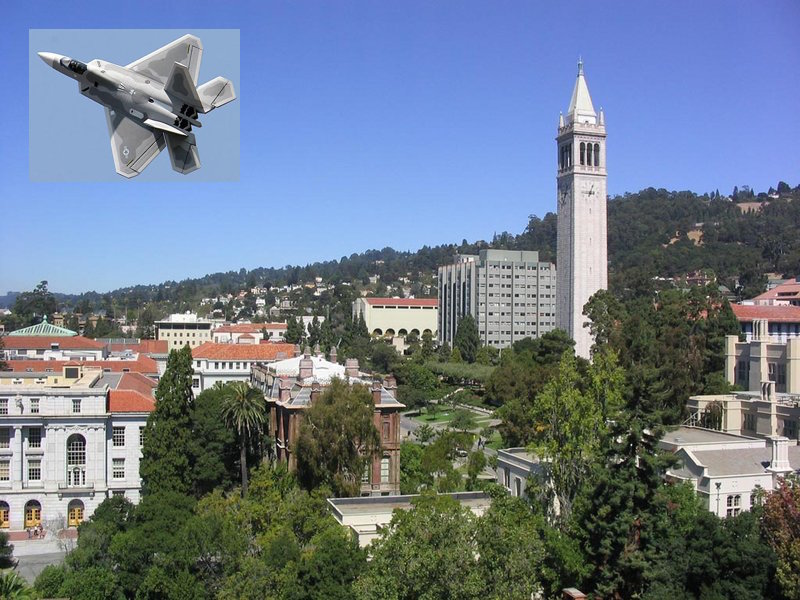

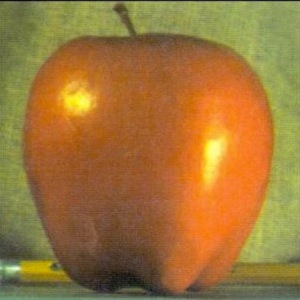

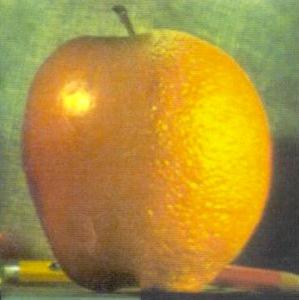

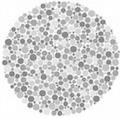

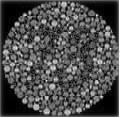

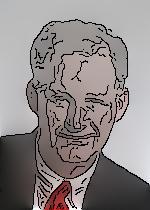

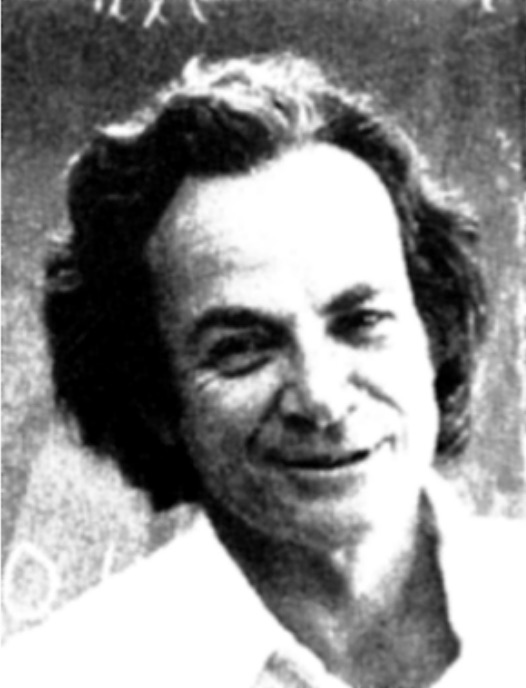

Optimizing with respect to the pixel values for each color channel individually, we obtain some pretty great results!
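As a rough illustration of the per-channel optimization, here is a minimal sketch that builds the least-squares system with dense NumPy arrays and solves it one channel at a time. The function names (`poisson_blend_channel`, `poisson_blend`) and the dense solver are assumptions for illustration, not the code used for the results above. A dense system only suits tiny images, so a real implementation would likely use a sparse solver such as `scipy.sparse.linalg.lsqr`.

```python
import numpy as np

def poisson_blend_channel(source, target, mask):
    """Solve for pixel values x inside `mask` whose gradients match `source`."""
    mask = np.asarray(mask, dtype=bool)
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    h, w = mask.shape
    n = int(mask.sum())
    index = np.full((h, w), -1)
    index[mask] = np.arange(n)          # unknown number for each masked pixel
    rows, b = [], []
    for y, x in zip(*np.nonzero(mask)):
        for dy, dx in ((0, 1), (1, 0), (0, -1), (-1, 0)):
            ny, nx = y + dy, x + dx
            if not (0 <= ny < h and 0 <= nx < w):
                continue
            row = np.zeros(n)
            row[index[y, x]] = 1.0
            rhs = source[y, x] - source[ny, nx]   # s_i - s_j
            if mask[ny, nx]:
                row[index[ny, nx]] = -1.0         # x_i - x_j = s_i - s_j
            else:
                rhs += target[ny, nx]             # x_i = (s_i - s_j) + t_j
            rows.append(row)
            b.append(rhs)
    x_hat = np.linalg.lstsq(np.array(rows), np.array(b), rcond=None)[0]
    out = target.copy()
    out[mask] = x_hat                   # same row-major order as `index`
    return out

def poisson_blend(source, target, mask):
    """Blend each color channel independently and restack them."""
    channels = [poisson_blend_channel(source[..., c], target[..., c], mask)
                for c in range(source.shape[-1])]
    return np.stack(channels, axis=-1)
```

The sketch assumes `source` and `target` are already aligned and the same size, and that every masked region touches at least one unmasked pixel so the system has a unique solution.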

((x_i - t_j) - max{(s_i - s_j), (t_i - t_j)}).
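One way to read the `max` in this expression is "whichever gradient has the larger magnitude", which keeps strong edges regardless of their sign. That reading is an assumption, and the helper name `mixed_gradient` is hypothetical. A minimal sketch:

```python
import numpy as np

def mixed_gradient(s_i, s_j, t_i, t_j):
    """Pick the source or target gradient, whichever is larger in magnitude."""
    ds = np.asarray(s_i, dtype=float) - s_j   # source gradient s_i - s_j
    dt = np.asarray(t_i, dtype=float) - t_j   # target gradient t_i - t_j
    return np.where(np.abs(ds) >= np.abs(dt), ds, dt)
```

The returned value would replace the plain source gradient `(s_i - s_j)` on the right-hand side of the least-squares system. It works elementwise, so whole arrays of neighboring pixel pairs can be passed at once.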

error = alpha * overlap_error + (1-alpha) * correspondence_error;
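To make the weighting concrete, here is a small sketch of how the two terms might be computed for one candidate patch. It assumes both errors are sums of squared differences and that raw intensity serves as the correspondence map. The names `ssd` and `transfer_error` are hypothetical.

```python
import numpy as np

def ssd(a, b, weight=None):
    """Sum of squared differences, optionally restricted by a 0/1 weight mask."""
    diff = (np.asarray(a, dtype=float) - np.asarray(b, dtype=float)) ** 2
    if weight is not None:
        diff = diff * weight
    return float(diff.sum())

def transfer_error(candidate, existing, overlap_mask, target_patch, alpha):
    """Combine overlap and correspondence errors for one candidate patch."""
    # How well the candidate agrees with already-placed texture in the overlap.
    overlap_error = ssd(candidate, existing, overlap_mask)
    # How well the candidate matches the target image at this location.
    correspondence_error = ssd(candidate, target_patch)
    return alpha * overlap_error + (1 - alpha) * correspondence_error
```

A larger `alpha` favors seamless texture, while a smaller `alpha` favors fidelity to the target image.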

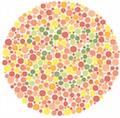

Here are some of the results!

alpha = 0.8 * (i - 1)/(num_iter - 1) + 0.1

The new error function is given by

error = alpha * (overlap_error + existing_error) + (1-alpha) * correspondence_error;
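The schedule and the updated error can be sketched as follows, assuming `i` counts iterations from 1 to `num_iter` and that there are at least two iterations. The function names are hypothetical.

```python
def alpha_schedule(num_iter):
    """Return alpha for iterations i = 1..num_iter, rising linearly from 0.1 to 0.9."""
    if num_iter < 2:
        raise ValueError("num_iter must be at least 2")
    return [0.8 * (i - 1) / (num_iter - 1) + 0.1 for i in range(1, num_iter + 1)]

def iterative_error(overlap_error, existing_error, correspondence_error, alpha):
    """Error for later passes: also penalize disagreement with the previous result."""
    return alpha * (overlap_error + existing_error) + (1 - alpha) * correspondence_error
```

With three iterations the schedule gives roughly 0.1, 0.5 and 0.9, so early passes weight the correspondence term most heavily and later passes weight texture coherence.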

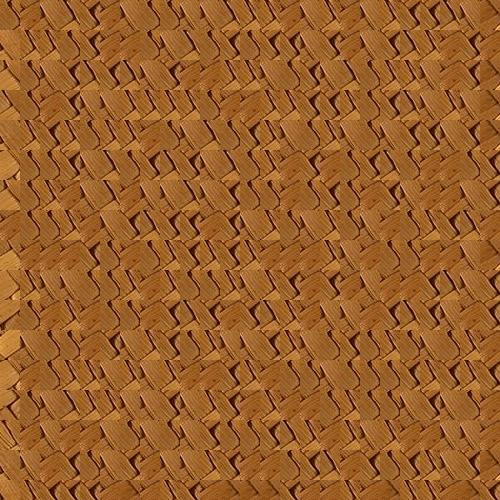

The progression of texture mapping results is shown below.

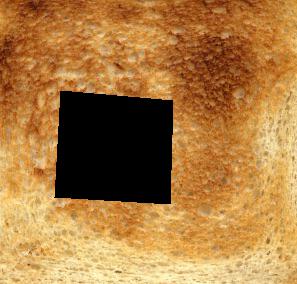

block_size, block_size sized array of ones to determine the number of neighbors that each point has, then randomly select one above a certain threshold. Once I've selected the point, I treat it as the center and take the neighboring pixels. This block is matched using an SSD distance metric against other blocks (not sourced from the masked region), and the minimum-distance block is used. As we can see, the results are passable, but with smarter priority functions and seam blending, the possibilities are certainly endless!
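The selection and matching steps described here might look roughly like the sketch below. It assumes a grayscale float image, an odd `block_size`, a boolean `hole` mask, that "neighbors" means known (unmasked) pixels, and a chosen point far enough from the image border for a full block. All function names are hypothetical, and the neighbor count uses a NumPy sliding window in place of an explicit convolution.

```python
import numpy as np

def box_count(known, block_size):
    """Count known pixels in the block_size x block_size window around each pixel."""
    pad = block_size // 2
    padded = np.pad(known.astype(float), pad)   # outside the image counts as unknown
    windows = np.lib.stride_tricks.sliding_window_view(
        padded, (block_size, block_size))
    return windows.sum(axis=(-2, -1))

def pick_fill_point(hole, block_size, threshold, rng):
    """Randomly pick a hole pixel with at least `threshold` known neighbors."""
    counts = box_count(~hole, block_size)
    ys, xs = np.nonzero(hole & (counts >= threshold))
    if len(ys) == 0:
        raise ValueError("no hole pixel meets the threshold")
    k = rng.integers(len(ys))
    return int(ys[k]), int(xs[k])

def best_block(image, hole, y, x, block_size):
    """Find the hole-free block with minimum SSD over the known pixels around (y, x)."""
    pad = block_size // 2
    patch = image[y - pad:y + pad + 1, x - pad:x + pad + 1]
    known = ~hole[y - pad:y + pad + 1, x - pad:x + pad + 1]
    best, best_cost = None, np.inf
    h, w = hole.shape
    for cy in range(pad, h - pad):
        for cx in range(pad, w - pad):
            if hole[cy - pad:cy + pad + 1, cx - pad:cx + pad + 1].any():
                continue                        # skip blocks touching the masked region
            cand = image[cy - pad:cy + pad + 1, cx - pad:cx + pad + 1]
            cost = (((cand - patch) ** 2) * known).sum()
            if cost < best_cost:
                best, best_cost = cand, cost
    return best
```

The unknown pixels of the selected block would then be overwritten with the corresponding pixels of the block returned by `best_block`, and the hole mask updated before the next point is picked.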
